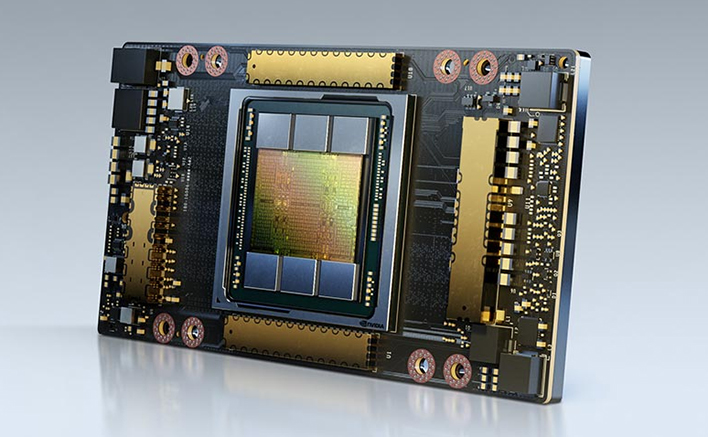

NVIDIA A800 Enterprise 80GB PCIe

Two months after the US government banned it from selling high-performance AI chips to China, NVIDIA has introduced the A800, a new GPU designed to bypass those restrictions.

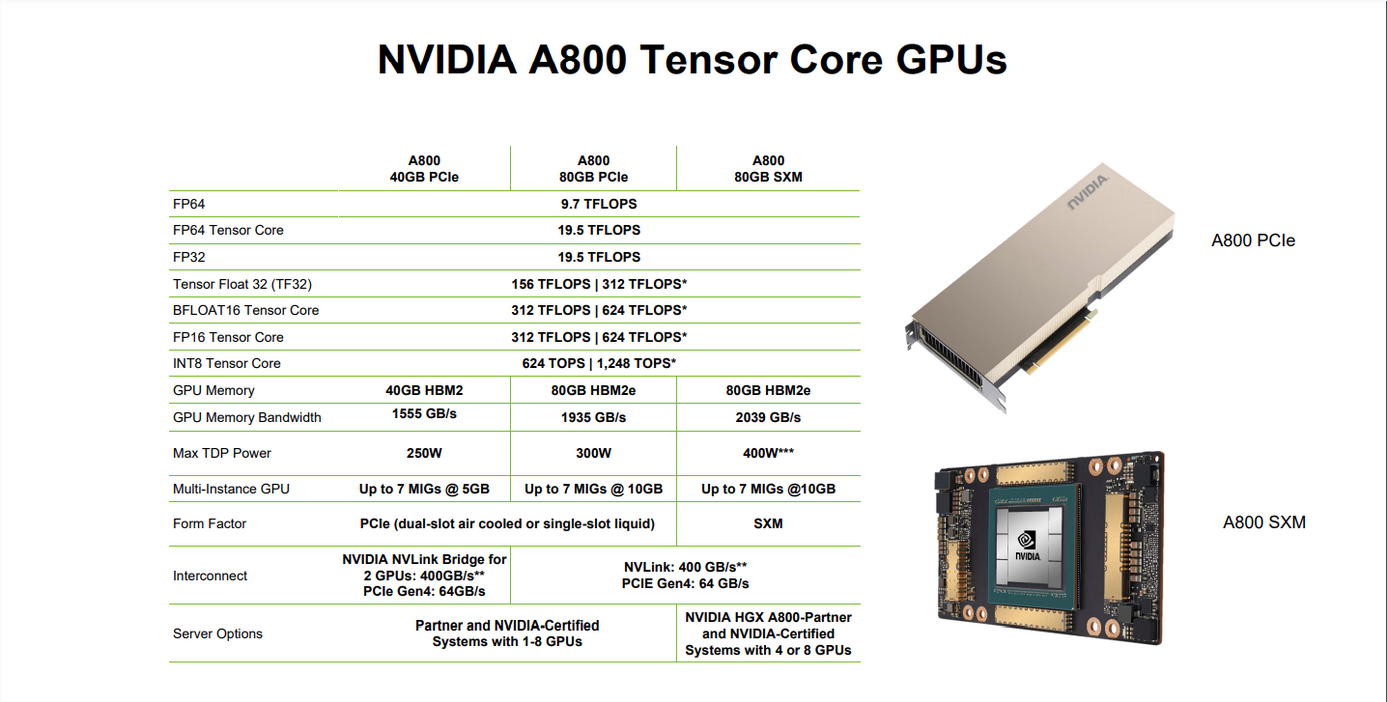

The new NVIDIA A800 is based on the same Ampere microarchitecture as the A100, the chip the US government used as its performance baseline for the export controls.

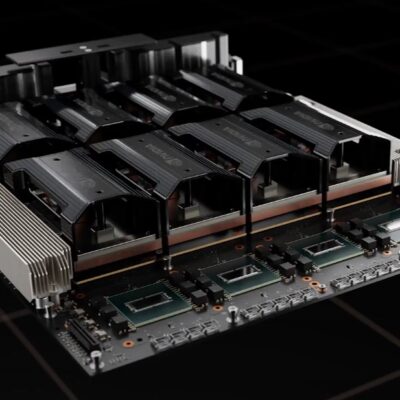

Despite its numerically larger model number (8 is considered a lucky number in China and was probably chosen to appeal to Chinese buyers), this is a detuned part. Its performance is slightly reduced to meet export control limits, although the 33% reduction in NVLink speed is hardly noticeable. It can be scaled to at most 8 GPUs per system, rather than 16 like the A100. This card is the industry-standard, premier-value choice for ever more demanding high-end AI and CGI workloads.
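As a quick check on the 33% figure, the short sketch below computes the relative drop in NVLink bandwidth from the per-GPU values listed in the spec tables (600 GB/s for the A100, 400 GB/s for the A800). The constant and function names are illustrative only and do not come from any NVIDIA tool.

```python
# NVLink bandwidth per GPU in GB/s, taken from the spec tables on this page
A100_NVLINK_GBPS = 600
A800_NVLINK_GBPS = 400


def percent_reduction(before: float, after: float) -> float:
    """Return the relative drop from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


reduction = percent_reduction(A100_NVLINK_GBPS, A800_NVLINK_GBPS)
print(f"NVLink bandwidth reduction: {reduction:.1f}%")  # prints: NVLink bandwidth reduction: 33.3%
```

The drop is 200 GB/s out of 600 GB/s, i.e. one third. PCIe Gen4 bandwidth (64 GB/s) is identical on both cards, so only the NVLink bridge path is affected.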

Ships within 10 days of payment. Limited allocations available. Includes a 3-year warranty. All sales are final: no returns or cancellations.

Description

| Specifications | A100 80GB PCIe | A800 80GB PCIe |
|---|---|---|
| FP64 | 9.7 TFLOPS | 9.7 TFLOPS |
| FP64 Tensor Core | 19.5 TFLOPS | 19.5 TFLOPS |
| FP32 | 19.5 TFLOPS | 19.5 TFLOPS |
| Tensor Float 32 | 156 TFLOPS | 156 TFLOPS |
| BFLOAT16 Tensor Core | 312 TFLOPS | 312 TFLOPS |
| FP16 Tensor Core | 312 TFLOPS | 312 TFLOPS |
| INT8 Tensor Core | 624 TOPS | 624 TOPS |
| GPU Memory | 80 GB HBM2e | 80 GB HBM2e |
| GPU Memory Bandwidth | 1,935 GB/s | 1,935 GB/s |
| TDP | 300 W | 300 W |
| Multi-Instance GPU | Up to 7 MIGs @ 10 GB | Up to 7 MIGs @ 10 GB |
| Interconnect | NVLink: 600 GB/s; PCIe Gen4: 64 GB/s | NVLink: 400 GB/s; PCIe Gen4: 64 GB/s |
| Server Options | 1-8 GPUs | 1-8 GPUs |

| Specifications | A100 40GB PCIe | A800 40GB PCIe |
|---|---|---|
| FP64 | 9.7 TFLOPS | 9.7 TFLOPS |
| FP64 Tensor Core | 19.5 TFLOPS | 19.5 TFLOPS |
| FP32 | 19.5 TFLOPS | 19.5 TFLOPS |
| Tensor Float 32 | 156 TFLOPS | 156 TFLOPS |
| BFLOAT16 Tensor Core | 312 TFLOPS | 312 TFLOPS |
| FP16 Tensor Core | 312 TFLOPS | 312 TFLOPS |
| INT8 Tensor Core | 624 TOPS | 624 TOPS |
| GPU Memory | 40 GB HBM2 | 40 GB HBM2 |
| GPU Memory Bandwidth | 1,555 GB/s | 1,555 GB/s |
| TDP | 250 W | 250 W |
| Multi-Instance GPU | Up to 7 MIGs @ 5 GB | Up to 7 MIGs @ 5 GB |
| Interconnect | NVLink: 600 GB/s; PCIe Gen4: 64 GB/s | NVLink: 400 GB/s; PCIe Gen4: 64 GB/s |
| Server Options | 1-8 GPUs | 1-8 GPUs |