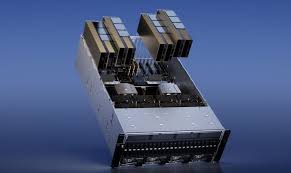

NVIDIA H100 NVL HBM3 94GB 350W

The H100 NVL has a full 6144-bit memory interface (1024-bit for each HBM3 stack) and memory speed up to 5.1 Gbps. This means that the maximum throughput is 7.8GB/s, more than twice as much as the H100 SXM. Large Language Models require large buffers and higher bandwidth will certainly have an impact as well.

NVIDIA H100 NVL for Large Language Model Deployment is ideal for deploying massive LLMs like ChatGPT at scale. The new H100 NVL with 96GB of memory with Transformer Engine acceleration delivers up to 12x faster inference performance at GPT-3 compared to the prior generation A100 at data center scale.

Ships in 5 days from payment.

Description

NVIDIA Announces Its First Official ChatGPT GPU, The H100 NVL With 96 GB HBM3 Memory

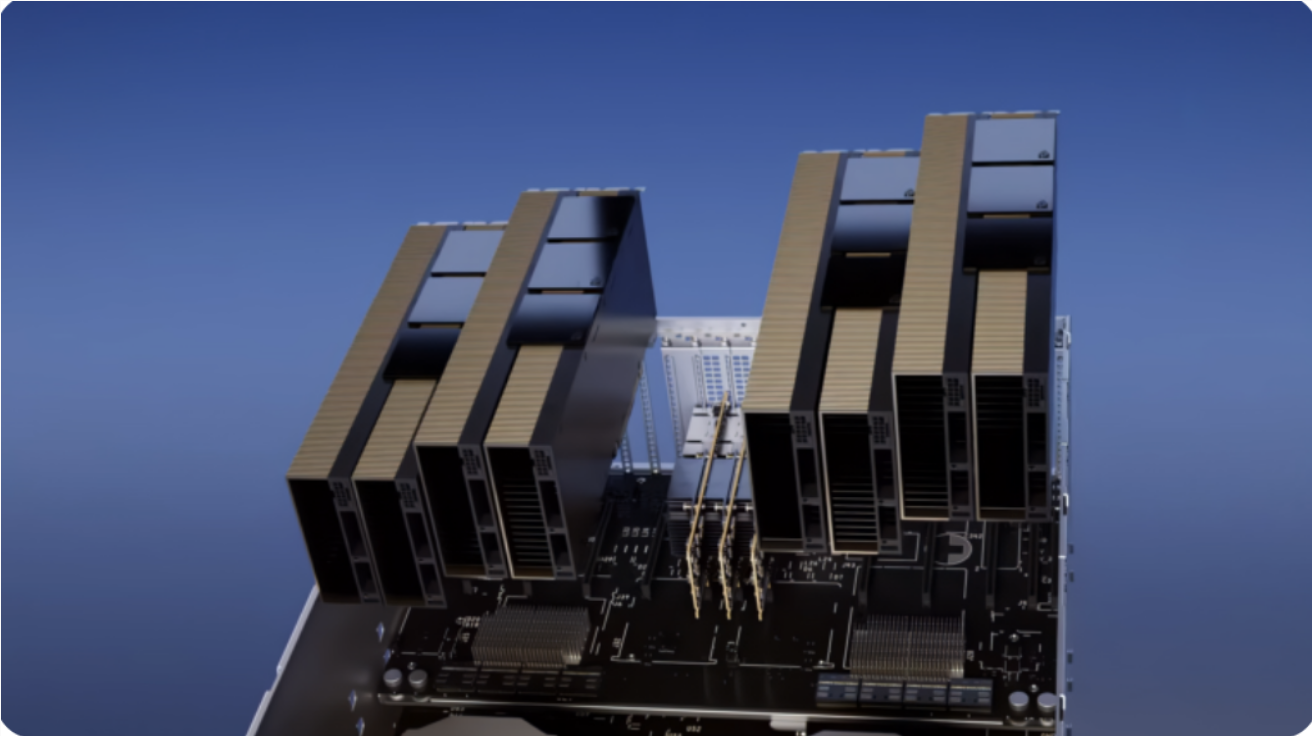

The NVIDIA GPU-powered H100 NVL graphics card is said to feature a dual-GPU NVLINK interconnect with each chip featuring 96 GB of HBM3e memory. The GPU is able to process up to 175 Billion ChatGPT parameters on the go. Four of these GPUs in a single server can offer up to 10x the speed up compared to a traditional DGX A100 server with up to 8 GPUs.

NVIDIA launches its first dual-GPU in years

just not for gamers

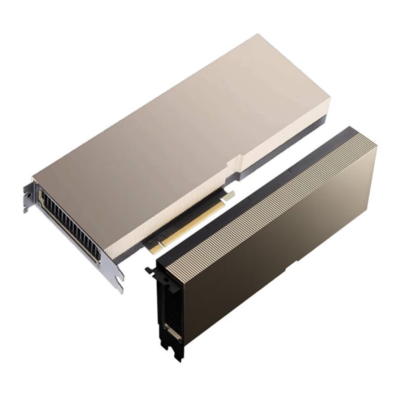

The H100 NVL represents the best bin in the NVIDIA Hopper lineup. It is a technical variant of the H100 data-center accelerator designed specifically for one purpose, to boost Al language models, such as Chat-GPT.

In short, the NVL stands for NVLink which is used by this configuration on the H100 GPU. The H100 NVL is not one GPU but a dual-GPU option of two PCIe cards connected with each other through three NVLink Gen4 bridges.

But the NVL variant has another advantage over existing H100 GPUs – memory capacity. This GPU uses all six stacks of HBM3 memory offering a total of 188 GB of high-speed buffer. This is an unusual capacity that indicates only 94GB is available on each GPU, not 96 GB.

NVIDIA H100 NVL

Max Memory Server Card for Large Language Models

NVIDIA is touting the H100 NVL as offering 12x the GPT3-175B inference throughput as a last-generation HGX A100 (8 H100 NVLs vs. 8 A100s). Which for customers looking to deploy and scale up their systems for LLM workloads as quickly as possible, is certainly going to be tempting. As noted earlier, H100 NVL doesn’t bring anything new to the table in terms of architectural features – much of the performance boost here comes from the Hopper architecture’s new transformer engines – but the H100 NVL will serve a specific niche as the fastest PCIe H100 option, and the option with the largest GPU memory pool.

| Specification | H100 SXM | H100 PCIe | H100 NVL^2 |

|---|---|---|---|

| FP64 | 34 teraFLOPS | 26 teraFLOPS | 68 teraFLOPS |

| FP64 Tensor Core | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |

| FP32 | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |

| TF32 Tensor Core | 989 teraFLOPS | 756teraFLOPS | 1,979 teraFLOPS’ |

| BFLOAT16 Tensor Core | 1,979 teraFLOPS | 1,513 teraFLOPS | 3,958 teraFLOPS |

| FP16 Tensor Core | 1,979 teraFLOPS | 1,513 teraFLOPS | 3,958 teraFLOPS |

| FP8 Tensor Core | 3,958 teraFLOPS | 3,026 teraFLOPS | 7,916 teraFLOPS |

| INT8 Tensor Core | 3,958 TOPS | 3,026 TOPS | 7,916 TOPS |

| GPU memory | 80GB | 80GB | 188GB |

| GPU memory bandwidth | 3.35TB/s | 2TB/s | 7.8TB/s |

| Decoders | 7 NVDEC | 7 NVDEC | 14 NVDEC |

| 7 JPEG | 7 JPEG | 14 JPEG | |

| Max thermal design power (TDP) | Up to 700W (configurable) | 300-350W (configurable) | 2x 350-400W (configurable) |

| Multi-Instance GPUs | Up to 7 MIGS @ 10GB each | Up to 7 MIGS @ 10GB each | Up to 14 MIGS @ 12GB each |

| Form factor | SXM | PCle | 2x PCIe |

| Interconnect | NVLink: 900GB/s PCIe Gen5: 128GB/s | Dual-slot air-cooled NVLink: 600GB/s PCIe Gen5: 128GB/s | Dual-slot air-cooled NVLink: 600GB/s PCIe Gen5: 128GB/s |

| Server options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems with 4 or 8 GPUs NVIDIA DGX H100 with 8 GPUS | Partner and NVIDIA-Certified Systems with 1-8 GPUs | Partner and NVIDIA-Certified Systems with 2-4 pairs |

| NVIDIA AI Enterprise | Add-on | Included | Add-on |