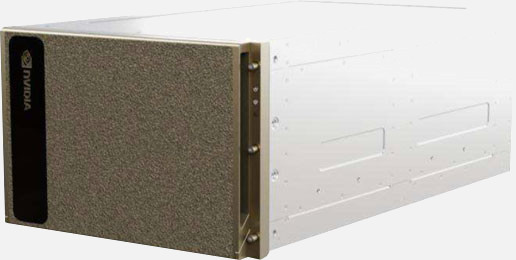

NVIDIA 8X DGX H100 Deep Learning Console

✓ Equipped with 8x NVIDIA H100 Tensor Core GPUs (SXM5)

✓ GPU memory totals 640GB

✓ Achieves 32 petaFLOPS FP8 performance

✓ Incorporates 4x NVIDIA® NVSwitch™

✓ System power usage peaks at ~10.2kW

✓ Employs Dual 56-core 4th Gen Intel® Xeon® Scalable processors

✓ Provides 2TB of system memory

✓ Offers robust networking, including 4x OSFP ports, NVIDIA ConnectX-7 VPI, and options for 400 Gb/s InfiniBand or 200 Gb/s Ethernet

✓ Features a 10 Gb/s onboard NIC with RJ45 for the management network, with an optional 50 Gb/s Ethernet NIC

✓ Storage includes 2x 1.9TB NVMe M.2 for OS and 8x 3.84TB NVMe U.2 for internal storage

✓ Comes pre-loaded with NVIDIA AI Enterprise software, NVIDIA Base Command, and a choice of Ubuntu, Red Hat Enterprise Linux, or CentOS operating systems

✓ Operates within a temperature range of 5–30°C (41–86°F)

✓ Includes a 3-year manufacturer parts or replacement warranty

Description

NVIDIA DGX H100

The Gold Standard for AI Infrastructure

NVIDIA DGX H100 powers business innovation and optimization. The latest iteration of NVIDIA’s legendary DGX systems and the foundation of NVIDIA DGX SuperPOD™, DGX H100 is an AI powerhouse that features the groundbreaking NVIDIA H100 Tensor Core GPU. The system is designed to maximize AI throughput, providing enterprises with a highly refined, systemized, and scalable platform to help them achieve breakthroughs in natural language processing, recommender systems, data analytics, and much more. Available on-premises and through a wide variety of access and deployment options, DGX H100 delivers the performance needed for enterprises to solve the biggest challenges with AI.

Break Through the Barriers to AI at Scale

As the world’s first system with the NVIDIA H100 Tensor Core GPU, NVIDIA DGX H100 breaks the limits of AI scale and performance. It features 9X more performance, 2X faster networking with NVIDIA ConnectX®-7 smart network interface cards (SmartNICs), and high-speed scalability for NVIDIA DGX SuperPOD. The next-generation architecture is supercharged for the largest, most complex AI jobs, such as natural language processing and deep learning recommendation models.

Powered by NVIDIA Base Command

NVIDIA Base Command powers every DGX system, enabling organizations to leverage the best of NVIDIA software innovation. Enterprises can unleash the full potential of their DGX investment with a proven platform that includes enterprise-grade orchestration and cluster management, libraries that accelerate compute, storage and network infrastructure, and an operating system optimized for AI workloads. Additionally, DGX systems include NVIDIA AI Enterprise, offering a suite of software optimized to streamline AI development and deployment.

| Specification | Description |
|---|---|
| GPU | 8x NVIDIA H100 Tensor Core GPUs SXM5 |
| GPU memory | 640GB total |
| Performance | 32 petaFLOPS FP8 |
| NVIDIA® NVSwitch™ | 4x |
| System power usage | ~10.2kW max |
| CPU | Dual 56-core 4th Gen Intel® Xeon® Scalable processors |
| System memory | 2TB |
| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI (400 Gb/s InfiniBand or 200 Gb/s Ethernet); 2x dual-port NVIDIA ConnectX-7 VPI (1x 400 Gb/s InfiniBand, 1x 200 Gb/s Ethernet) |
| Management network | 10 Gb/s onboard NIC with RJ45; 50 Gb/s Ethernet optional NIC; Host baseboard management controller (BMC) with RJ45 |
| Storage | OS: 2x 1.9TB NVMe M.2; Internal storage: 8x 3.84TB NVMe U.2 |
| Software | NVIDIA AI Enterprise – Optimized AI software; NVIDIA Base Command – Orchestration, scheduling, and cluster management; Ubuntu / Red Hat Enterprise Linux / CentOS – Operating system |
| Support | Comes with 3-year business-standard hardware and software support |
| Operating temperature range | 5–30°C (41–86°F) |
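
For capacity planning, it can help to break the headline figures in the table down into per-component and aggregate numbers. The following is a minimal sketch in Python, not an NVIDIA tool: the class and function names (`DgxH100Spec`, `derived_figures`, `c_to_f`) are hypothetical, the inputs are copied from the table above, and the per-GPU values simply assume the totals divide evenly across the eight GPUs. The aggregate network figure assumes all eight single-port adapters run at the 400 Gb/s InfiniBand option, and the FP8 number is the vendor's quoted peak rather than a measured result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxH100Spec:
    """Headline figures copied from the specification table (hypothetical container)."""
    gpus: int = 8
    gpu_memory_total_gb: int = 640
    fp8_petaflops: float = 32.0
    cpu_sockets: int = 2
    cores_per_cpu: int = 56
    data_drives: int = 8             # internal NVMe U.2 drives
    data_drive_tb: float = 3.84
    compute_nics: int = 8            # single-port ConnectX-7 adapters
    nic_speed_gbps: int = 400        # assumes the InfiniBand option
    temp_range_c: tuple = (5, 30)


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def derived_figures(spec: DgxH100Spec) -> dict:
    """Derive per-GPU and aggregate values, assuming totals split evenly."""
    return {
        "gpu_memory_per_gpu_gb": spec.gpu_memory_total_gb / spec.gpus,
        "fp8_petaflops_per_gpu": spec.fp8_petaflops / spec.gpus,
        "total_cpu_cores": spec.cpu_sockets * spec.cores_per_cpu,
        "internal_storage_tb": round(spec.data_drives * spec.data_drive_tb, 2),
        "aggregate_network_tbps": spec.compute_nics * spec.nic_speed_gbps / 1000,
        "temp_range_f": tuple(c_to_f(t) for t in spec.temp_range_c),
    }


if __name__ == "__main__":
    for name, value in derived_figures(DgxH100Spec()).items():
        print(f"{name}: {value}")
```

Under these assumptions the sketch gives 80 GB of memory and 4 petaFLOPS FP8 per GPU, 112 CPU cores, 30.72 TB of internal storage, and 3.2 Tb/s of aggregate compute-network bandwidth. The temperature conversion reproduces the 41–86°F range listed in the table.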